Model Management

Creating a New Model Set

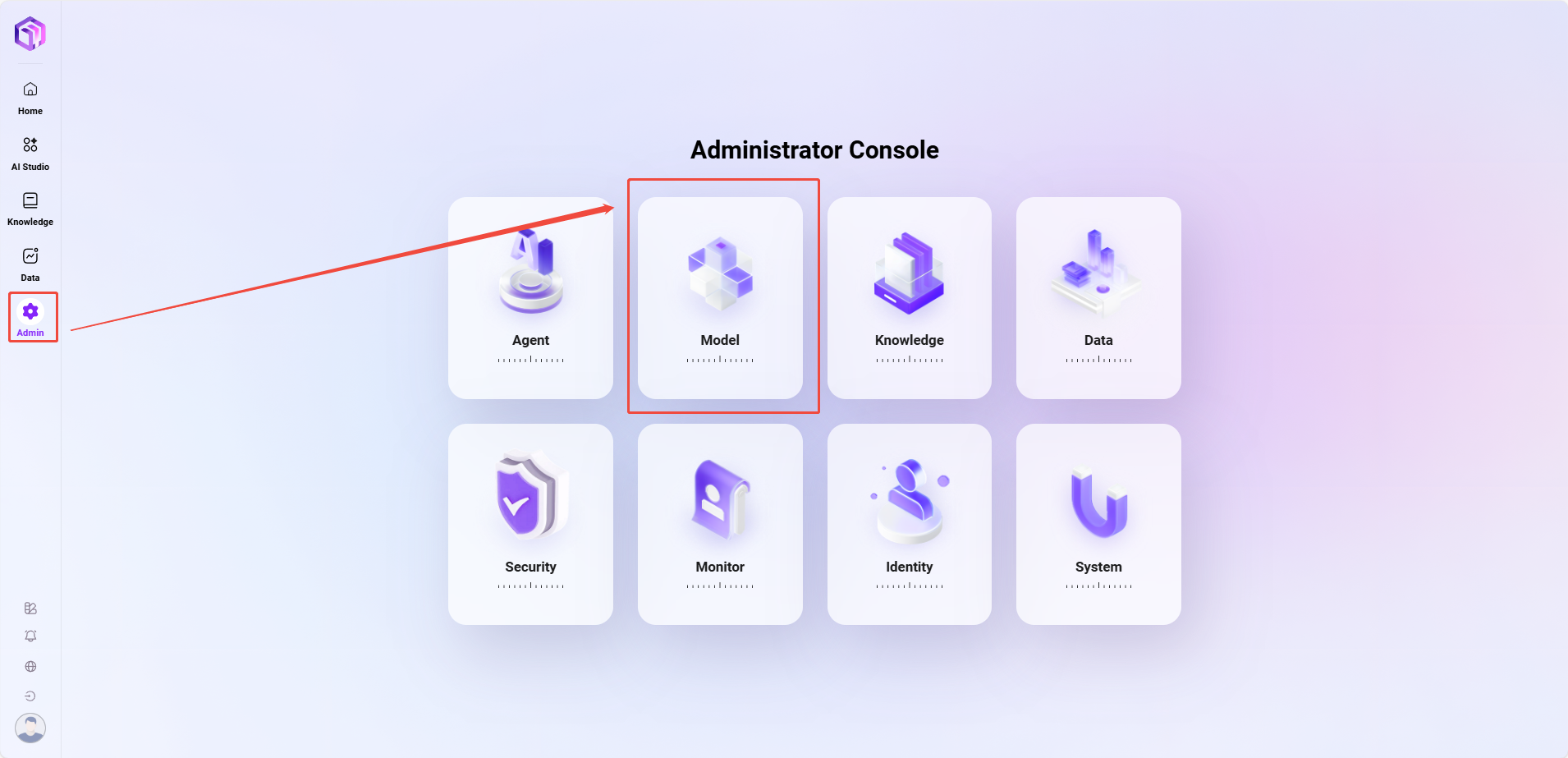

Administrators can create new model sets through the following steps:

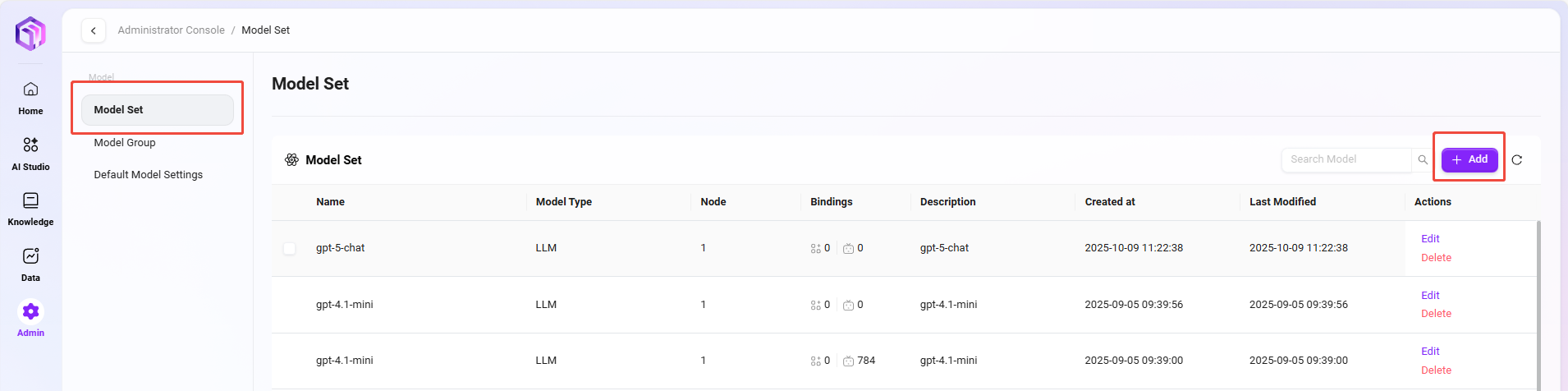

- Navigate to the Model Set Management Page: Go to Model Management, then click "Model Sets".

- Click "New": Click the "New" button on the right side of the page to start creating a new model set.

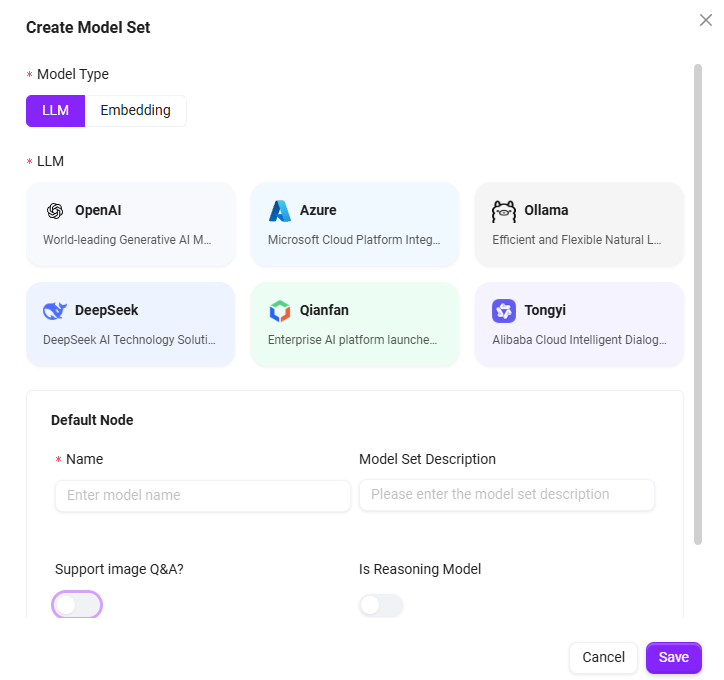

- Select Model Type: In the pop-up window, select the model type. Available types include:

  - LLM (Large Language Model)

  - Embedding (Embedding Model)

💡 Tip: The system only supports adding one Embedding model.

- Select Language Model: Based on requirements, choose a suitable language model. Currently supported language models include:

  - LLM Language Models: OpenAI, Deepseek, Azure, Ollama, Tongyi, Qianfan, Anthropic, VertexAI, AWS AI.

  - Embedding Language Models: OpenAI Embeddings, Azure Embeddings, AliEmbeddings, Ollama Embeddings, Amazon Bedrock Embeddings, VertexAIEmbeddings.

- Fill in Model Set Information: Enter the model set's name and description, ensuring the information is clear and accurate (name within 50 characters, description within 200 characters).

- Select Additional Settings: Based on needs, choose whether to support image Q&A and whether it is a reasoning model.

- Confirm Creation: After filling in, click the "Confirm" button to complete the creation of the model set.

Through these steps, administrators can successfully create a new model set and configure corresponding settings.
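
The limits in the steps above can be checked before the form is submitted. The following is a minimal sketch, not the platform's own validation: the field names (`model_type`, `name`, `description`, `supports_image_qa`, `is_reasoning_model`) are hypothetical, and it assumes an empty name is rejected.

```python
from dataclasses import dataclass

# Limits taken from the steps above; all field names are hypothetical.
MAX_NAME_LEN = 50
MAX_DESCRIPTION_LEN = 200
MODEL_TYPES = {"LLM", "Embedding"}


@dataclass
class ModelSetDraft:
    model_type: str
    name: str
    description: str = ""
    supports_image_qa: bool = False
    is_reasoning_model: bool = False


def validate_model_set(draft: ModelSetDraft) -> list[str]:
    """Return a list of problems; an empty list means the draft passes."""
    problems = []
    if draft.model_type not in MODEL_TYPES:
        problems.append(f"unknown model type: {draft.model_type!r}")
    if not draft.name.strip():
        # Assumption: a blank name is not accepted.
        problems.append("name is required")
    elif len(draft.name) > MAX_NAME_LEN:
        problems.append(f"name exceeds {MAX_NAME_LEN} characters")
    if len(draft.description) > MAX_DESCRIPTION_LEN:
        problems.append(f"description exceeds {MAX_DESCRIPTION_LEN} characters")
    return problems
```

Returning a list of problems rather than raising on the first one lets a caller show every issue at once, which mirrors how a form would typically flag several fields together.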

When adding an Embedding model, please note the vector dimension limit:

- PgSQL vector fields support a maximum of 2000 dimensions;

- When purchasing an Embedding model, make sure its output vector dimension does not exceed 2000 (some models allow this to be adjusted in their configuration);

- When adding a vector model in the system, enter a vector dimension of at most 2000; a larger value may cause storage exceptions or indexing failures.

- Recommended Token context limit: 8192.
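
A quick pre-check of an Embedding configuration might look like the sketch below. It is illustrative only: the function name is hypothetical, and it treats a dimension of exactly 2000 as allowed, following the stated maximum.

```python
MAX_VECTOR_DIMENSIONS = 2000    # maximum stated above for PgSQL vector fields
RECOMMENDED_TOKEN_LIMIT = 8192  # recommended token context limit stated above


def check_embedding_config(dimensions: int,
                           token_limit: int = RECOMMENDED_TOKEN_LIMIT) -> list[str]:
    """Return warnings for an Embedding model configuration; empty means none."""
    warnings = []
    if dimensions <= 0:
        warnings.append("vector dimension must be a positive integer")
    elif dimensions > MAX_VECTOR_DIMENSIONS:
        warnings.append(
            f"vector dimension {dimensions} exceeds the limit of {MAX_VECTOR_DIMENSIONS}"
        )
    if token_limit != RECOMMENDED_TOKEN_LIMIT:
        # Not an error, only a deviation from the recommendation.
        warnings.append(
            f"token context limit {token_limit} differs from the recommended "
            f"{RECOMMENDED_TOKEN_LIMIT}"
        )
    return warnings
```

For example, a model that outputs 3072-dimensional vectors by default would be flagged here and would need its output dimension reduced in the provider's configuration before being added.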

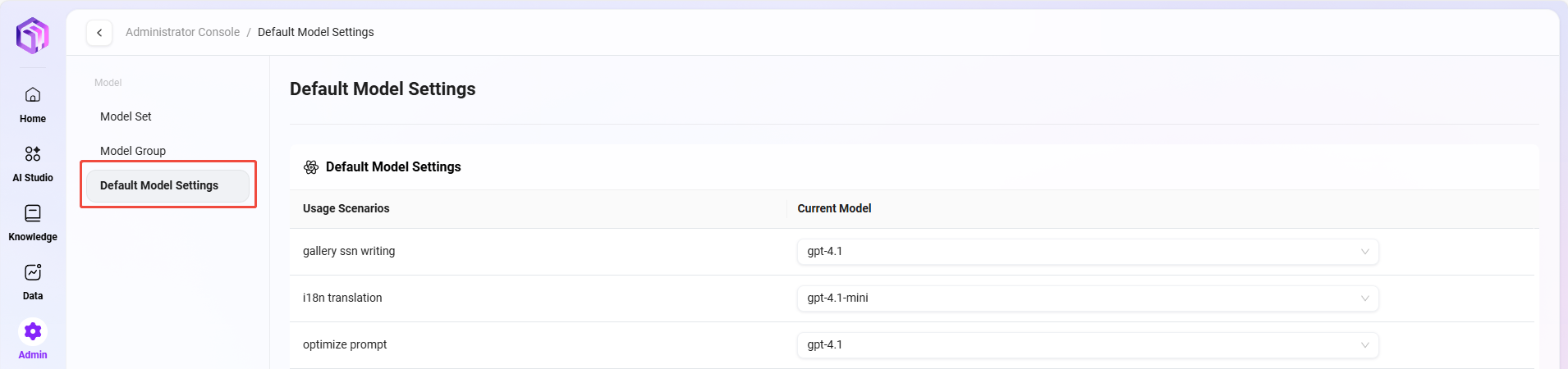

Default Model Settings

In Model Management, administrators can set default models to specify suitable models for different usage scenarios. For example, in scenarios like BI (Business Intelligence) or translation (Translate), the default model can be set to the Azure-4o model. This way, the system will automatically use the preset default model in corresponding scenarios, improving work efficiency and consistency.

The setup steps are similar to creating a model set. Administrators can choose appropriate models as default models for scenarios based on actual needs.
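
Conceptually, default models act as a lookup from scenario to model. The sketch below illustrates that idea only; the mapping, the `requested` override, and the error raised for an unconfigured scenario are assumptions, not documented platform behavior.

```python
from typing import Mapping, Optional

# Scenario names follow the examples above; the mapping itself is illustrative.
DEFAULT_MODELS = {"BI": "Azure-4o", "translate": "Azure-4o"}


def resolve_model(scenario: str,
                  defaults: Mapping[str, str] = DEFAULT_MODELS,
                  requested: Optional[str] = None) -> str:
    """Pick a model for a scenario: an explicit request wins, then the default."""
    if requested:
        return requested
    try:
        return defaults[scenario]
    except KeyError:
        # Assumption: a scenario without a default is reported, not silently guessed.
        raise LookupError(
            f"no default model configured for scenario {scenario!r}"
        ) from None
```

Calling `resolve_model("BI")` returns the preset default, while `resolve_model("translate", requested="my-model")` shows how an explicit choice could take precedence.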

Usage Scenarios

| Function Name | Description | Typical Scenario Examples |
|---|---|---|
| RAG | Combines with knowledge base for retrieval-augmented generation, improving the accuracy and reliability of large model answers | Enterprise knowledge base Q&A, intelligent customer service |
| i18n translation | Implements multi-language translation and interface internationalization, supporting global deployment | AI products for overseas users, international operation platforms |
| gallery ssn writing | Records each user interaction or content generation process, facilitating review and secondary editing | Dialogue history archiving, content creation records, version backtracking |
| gallery rednote | Users can mark key information or add annotations, assisting review and content auditing | Reviewing AI-generated content, user collaborative creation, highlighting key segments |
| gallery mindmap | Transforms text content into structured mind maps, enhancing information understanding | Project organization, knowledge graph generation |
| optimize prompt | Optimizes user-input prompts to improve model understanding and output quality | Assisting rewriting when user input is unclear, low-threshold question optimization |
| recommend question | Automatically recommends the next potentially interesting or related question, improving interaction experience | Chatbot conversation continuation, recommendation guidance |
| gallery chat lead | Provides dialogue guidance templates or "starter phrases" to help users initiate clearer questions or creation requests | Chat template library, creative prompts |
| recommend config | Automatically recommends large model parameter configurations (like temperature, whether to use RAG) based on tasks | Agent configuration panel, low-code/no-code intelligent recommendations |
| pdf_markdown | Parses PDF files into structured Markdown format for easy reading and subsequent processing | Document import into knowledge base, summary generation |
| translate | Automatically translates user input or model output for cross-language communication | Multilingual dialogue, multilingual customer service |
| BI | Uses large models to process structured data and generate visual analysis or business insights | Natural language analysis reports, chart generation, BI Q&A assistant |
| llm_ocr | Extracts text from images into structured text and combines it with large models for semantic understanding | Image Q&A, form recognition, PDF screenshot interpretation, image document search, etc. |

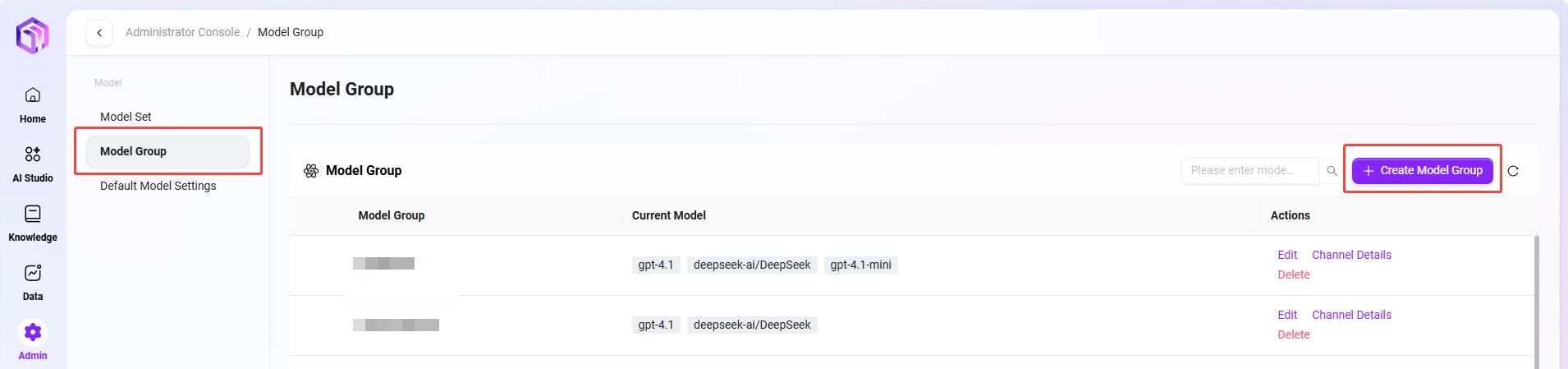

Creating Model Groups

Administrators can create model groups in Model Management. Once created, a model group can be selected when creating an assistant.

Here are the steps to create a model group:

- Navigate to the Model Group Management Page: Go to Admin, select "Model Management", then click "Model Groups".

- Click "New Model Group": Click the "New Model Group" button on the right side of the page to start creating a new model group.

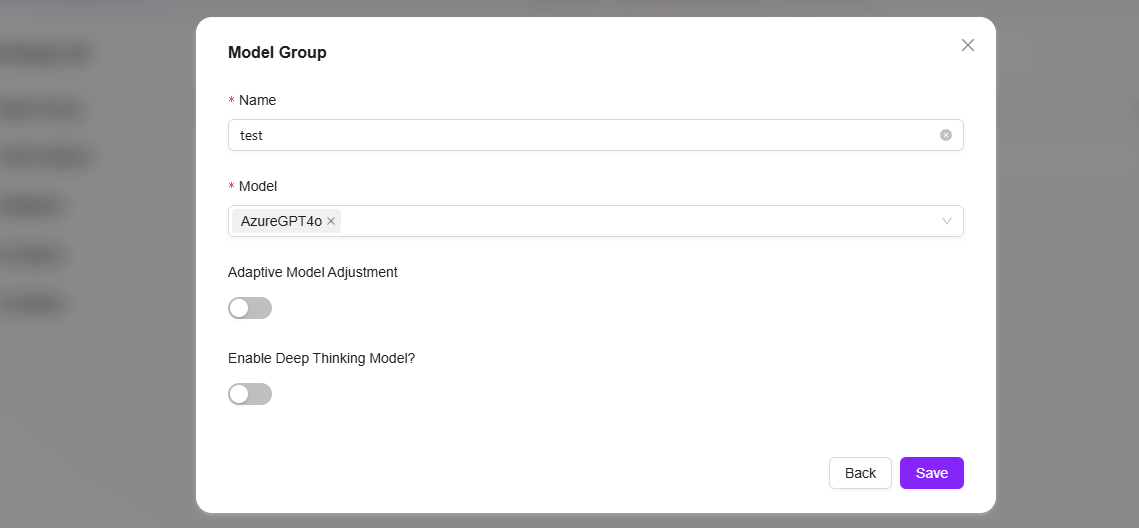

- Enter Model Group Name: Specify a unique name for the model group for easy identification (within 50 characters).

- Select Models: Select the models to include in this model group from the available model list; multiple selections are supported.

- Choose Whether to Enable Adaptive Model Deployment: Based on needs, choose whether to enable the adaptive model deployment function to improve model flexibility and adaptability.

- Choose Whether to Enable Deep Think Model: As needed, choose whether to enable the deep think model to enhance the model's intelligent processing capabilities.

- Click "Save": After confirming all settings are correct, click the "Save" button to successfully create the model group.

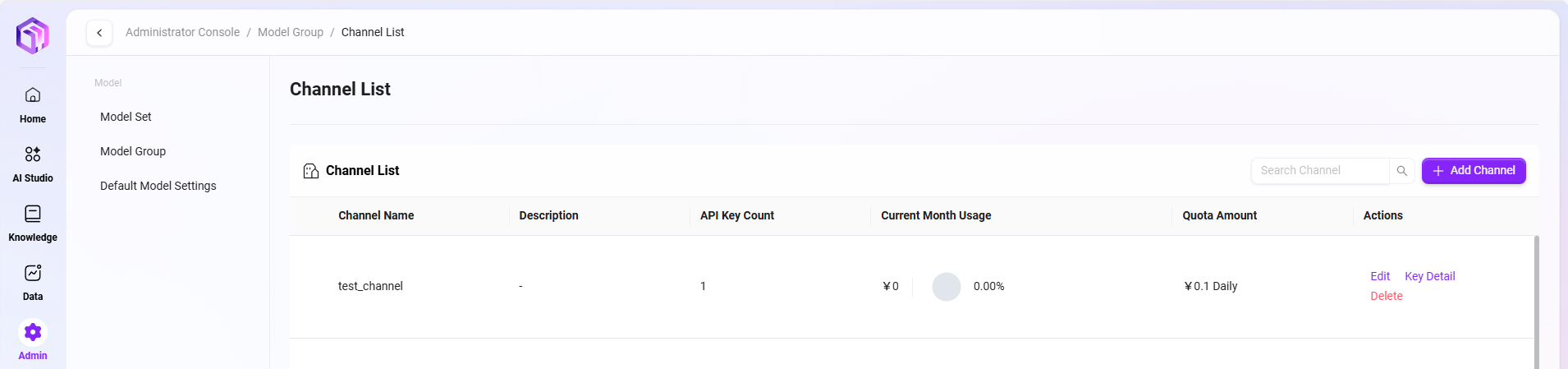

Model Group Channel Details

After creating a model group, open its "Channel Details" page to view all configured channels. From there you can:

- Create New Channel: Create a new call channel for the model group.

- Manage Keys: Click "Key Details" on the right to view all keys under that channel and create new ones.

This page facilitates unified management of each channel and their corresponding API Keys.

API Key Feature Explanation

API Keys on this platform are independent credentials with broad authorization. Each API Key acts as a standalone "pass" with complete access and call permissions:

- Independent of Platform User System: The calling party holding the API Key does not need to be a registered user of the platform nor possess any specific user permissions. As long as the request carries a valid API Key, it will be considered a legally authorized request, and the system will process and respond normally.

- Not Limited by License: Calls initiated through API Keys do not consume the platform's user License quota. Therefore, even if the number of actual registered users on the enterprise's platform is limited, it can flexibly support more service access and business scenarios through API Keys, achieving large-scale use without needing additional authorization or capacity expansion.

- Flexible Configuration as Needed: Different API Keys can be configured with different validity periods and permission scopes (such as access modules, data ranges, etc.) as needed, adapting to different integrated systems or business parties. It is recommended to generate independent API Keys for each integrated party for subsequent management and usage tracking.

⚠️ Security Warning: Please properly manage API Keys to avoid leakage. Once an API Key is misused externally, all requests initiated through that Key will have full permissions by default, which may pose risks to data and system security.
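
One common way to reduce the leakage risk is to keep the key out of source code and read it from the environment at call time. The sketch below only builds a request and does not send it. The URL, the `Authorization: Bearer` header layout, the payload shape, and the `PLATFORM_API_KEY` variable name are all hypothetical placeholders; consult the platform's API reference for the real values.

```python
import json
import os
import urllib.request
from typing import Optional

# Hypothetical values: endpoint, header layout, and payload are placeholders.
API_URL = "https://example.com/api/agent/chat"
API_KEY_ENV_VAR = "PLATFORM_API_KEY"


def build_request(question: str, api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) a POST request that carries an API Key."""
    key = api_key or os.environ.get(API_KEY_ENV_VAR)
    if not key:
        raise RuntimeError(f"set {API_KEY_ENV_VAR} or pass api_key explicitly")
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Giving each integrated party its own environment variable and key keeps usage traceable and lets one key be revoked without affecting the others.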

Agent Dialogue Interface Upgrade

💡 Tip: This feature is only supported in V4.1.2 and later versions

In addition to the original API Key call method, Agent API SSE (Server-Sent Events) sessions can now be accessed and managed with a User Token.

- User Authentication Upgrade: Solves the problem that traditional API Key methods cannot identify specific user identities. Supports third-party applications calling through user-level tokens (replacing unified API Keys) to achieve precise tracing of user request sources.

- Context Management Optimization: Supports third-party applications "actively" controlling dialogue context, allowing flexible creation of new sessions or continuation of previous dialogues, enhancing dialogue coherence and management flexibility.

- Compatibility Enhancement: The new calling method can be debugged and called using API testing tools like Apifox, facilitating development and integration testing.

This upgrade is particularly suitable for third-party application integration scenarios requiring precise user identity identification, providing finer control capabilities for Agent calls in multi-user environments.
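
On the client side, an integration of this kind has two parts: attaching the user token and session choice to the request, and reading the SSE stream. The sketch below shows both under stated assumptions. The SSE line parsing follows the standard `event:` / `data:` / blank-line framing, while the header layout and the `session_id` and `message` field names are hypothetical and not taken from the Agent API.

```python
from typing import Iterable, Iterator, Optional


def build_chat_request(user_token: str, message: str,
                       session_id: Optional[str] = None) -> dict:
    """Assemble headers and body; field names are hypothetical placeholders."""
    body = {"message": message}
    if session_id is not None:
        # Assumption: passing a session id continues a previous dialogue,
        # omitting it starts a new session.
        body["session_id"] = session_id
    headers = {
        "Authorization": f"Bearer {user_token}",
        "Accept": "text/event-stream",
    }
    return {"headers": headers, "body": body}


def parse_sse(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one dict per Server-Sent Event from an iterable of text lines."""
    event_type, data_lines = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line terminates the current event.
            if data_lines:
                yield {"event": event_type, "data": "\n".join(data_lines)}
            event_type, data_lines = "message", []
            continue
        if line.startswith(":"):
            continue  # comment or keep-alive line
        field, _, value = line.partition(":")
        if value.startswith(" "):
            value = value[1:]  # a single leading space is not part of the value
        if field == "event":
            event_type = value
        elif field == "data":
            data_lines.append(value)
```

`parse_sse` accepts any iterable of decoded lines, so it can be fed from an HTTP response body or from a captured log when debugging with an API testing tool.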

Model Configuration Explanation

This product supports the following enhanced features, all of which rely on services provided by Azure or other external platforms. Obtain the required access credentials (API Key, Endpoint, etc.) from the relevant platform for each feature you need:

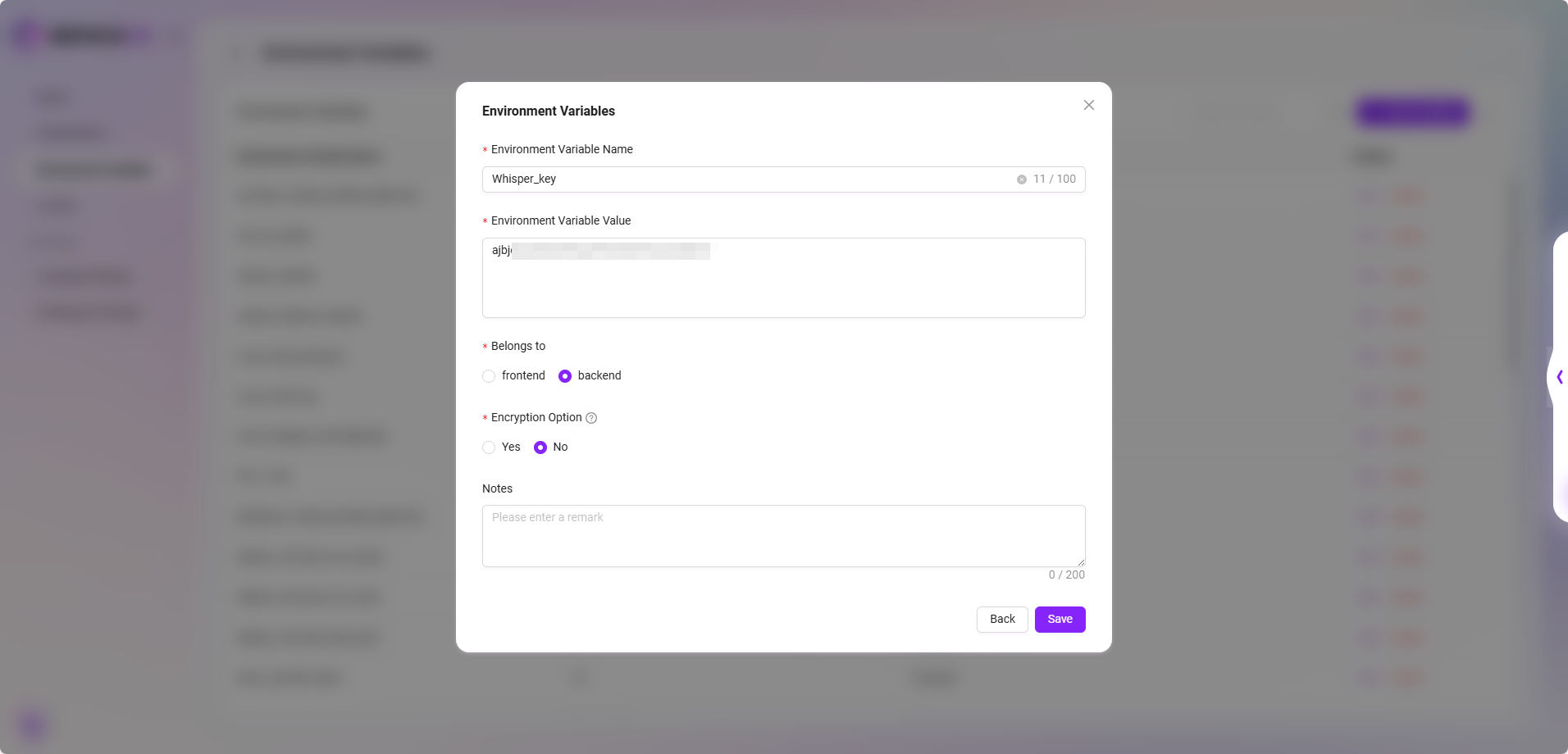

- Voice Input Function (Whisper Service)

  - Service Description: Achieves speech-to-text functionality by purchasing and deploying the Whisper service on Azure.

  - Configuration Method: Supports configuring multiple Whisper Keys through environment variables; variable names must clearly contain "Whisper"; if not configured, the voice input button will not be displayed.

  - Compatibility Note: Also supports configuring the Whisper service through initialization SQL. Configuration content will be automatically written into environment variables, supporting later modifications.

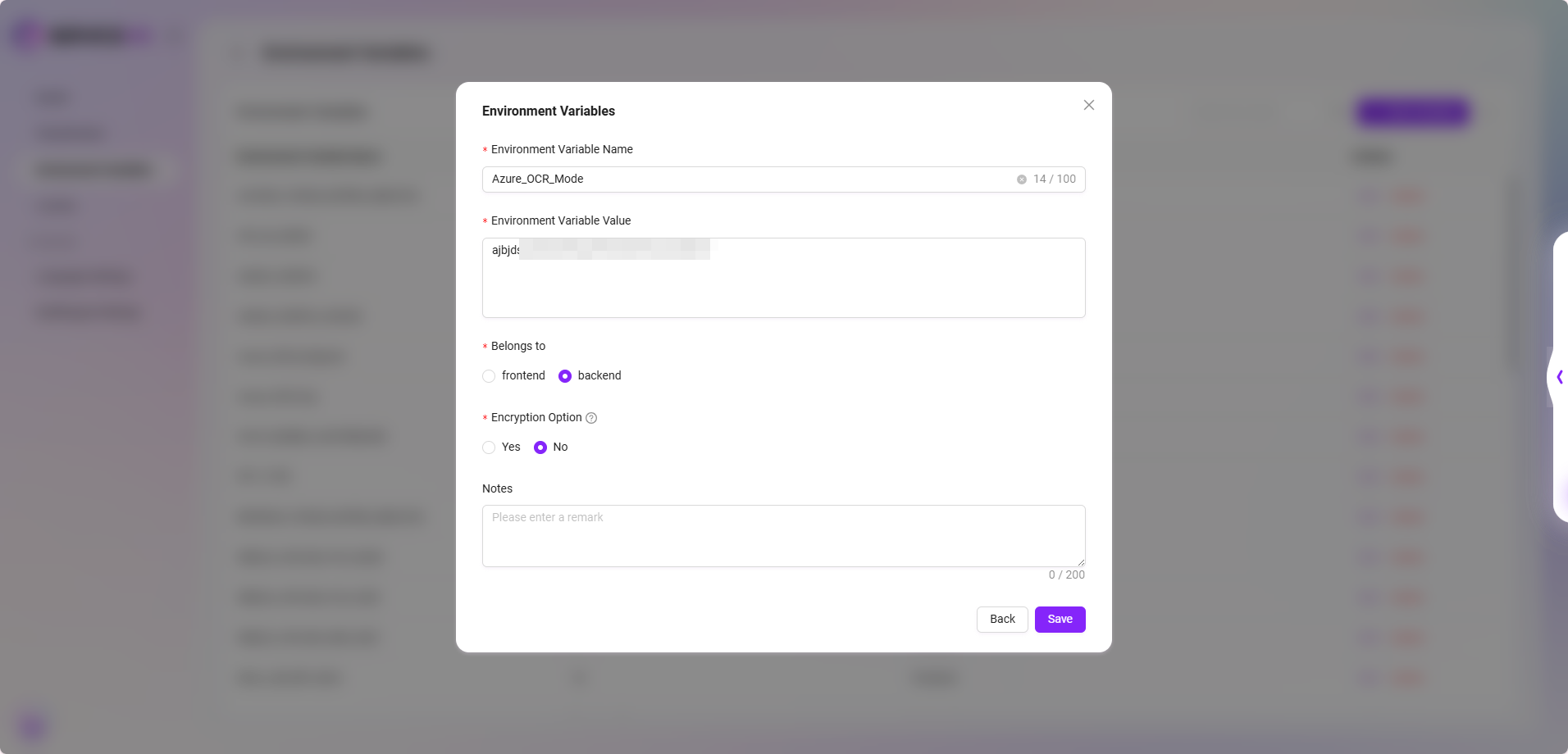

- Azure OCR Mode (Azure Document Intelligence)

  - Service Description: Achieves OCR capabilities based on Azure Document Intelligence, supporting "Basic" and "Advanced" recognition modes.

  - Configuration Method: Requires setting Azure OCR mode KEY and Endpoint in environment variables; if not configured, this OCR mode cannot be selected.

  - Interaction Prompt: The interface will automatically display selectable modes based on configuration status and restrict selection of invalid options.

- If you need to purchase services or obtain API Keys and Endpoints, please visit the Microsoft Azure official website or relevant service provider pages and choose appropriate pricing plans as needed.

- We recommend customers prioritize evaluating data security, response timeliness, and price factors before configuration. If deployment support is needed, please contact the technical support team.
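
The configuration rules above amount to feature flags derived from environment variables. The sketch below is one way to express that logic, not the product's implementation. The Azure OCR variable names (`AZURE_OCR_KEY`, `AZURE_OCR_ENDPOINT`) are hypothetical, and matching "Whisper" case-insensitively is an assumption.

```python
from typing import Mapping

# Hypothetical variable names for the Azure OCR credentials.
AZURE_OCR_KEY_VAR = "AZURE_OCR_KEY"
AZURE_OCR_ENDPOINT_VAR = "AZURE_OCR_ENDPOINT"


def whisper_keys(env: Mapping[str, str]) -> dict[str, str]:
    """Return non-empty variables whose name contains "whisper" (any case)."""
    return {
        name: value
        for name, value in env.items()
        if "whisper" in name.lower() and value.strip()
    }


def feature_flags(env: Mapping[str, str]) -> dict[str, bool]:
    """Derive which optional features could be shown for a given environment."""
    has_ocr_key = bool(env.get(AZURE_OCR_KEY_VAR, "").strip())
    has_ocr_endpoint = bool(env.get(AZURE_OCR_ENDPOINT_VAR, "").strip())
    return {
        # Voice input is shown only when at least one Whisper key is configured.
        "voice_input": bool(whisper_keys(env)),
        # Azure OCR mode needs both the key and the endpoint.
        "azure_ocr": has_ocr_key and has_ocr_endpoint,
    }
```

Passing `os.environ` to `feature_flags` evaluates the current process environment; passing a plain dictionary makes the same logic easy to test.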